The video that concerned me was this one (the part about performance starts around the 6:50 mark).

I’ve been using the term more generically, not just as an Apple brand.

In general, a “retina” display is one with a resolution high enough that you cannot distinguish individual pixels at a normal viewing distance. For a desktop display, this may be around 200 ppi. For a phone display, it may need to be higher (perhaps >300 ppi), because phones are typically held closer to your eyes.
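As a rough sanity check on those thresholds, here's a minimal sketch that assumes the common rule of thumb that 20/20 vision resolves detail down to about 1 arcminute. The viewing distances are hypothetical examples, not measurements, and real eyesight varies a lot.

```python
import math

# Assumption: ~20/20 vision resolves about 1 arcminute (1/60 of a degree).
ONE_ARCMINUTE_RAD = math.radians(1 / 60)


def retina_ppi(viewing_distance_in: float) -> float:
    """Pixel density at which one pixel subtends ~1 arcminute at the given distance (inches)."""
    # Size of the smallest detail the eye can resolve at this distance, in inches.
    pixel_pitch_in = viewing_distance_in * math.tan(ONE_ARCMINUTE_RAD)
    return 1 / pixel_pitch_in


# Hypothetical typical distances for a phone and a desktop monitor.
for label, distance in [("phone", 12), ("desktop", 20)]:
    print(f"{label} at {distance} in: ~{retina_ppi(distance):.0f} ppi")
```

Under that assumption, a phone held about 12 inches away needs roughly 286 ppi and a monitor about 20 inches away roughly 172 ppi, which is in the same ballpark as the figures above. Sit closer and the threshold goes up.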

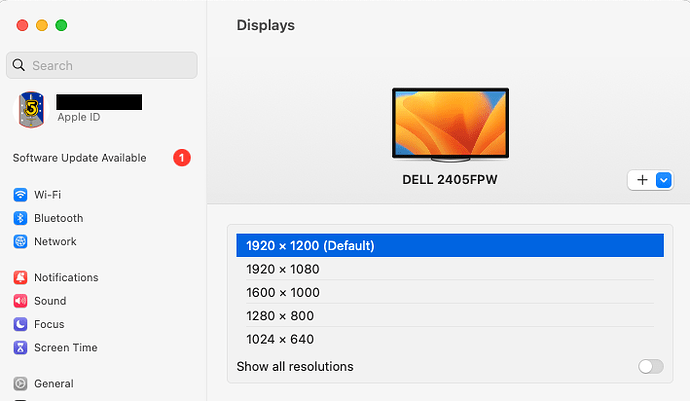

FWIW, my desktop display is a 24" screen at 1920x1200 resolution. This is about 94 ppi, and is NOT considered Retina by most people. A 4K (3840x2160) display at 24" is about 184 ppi, which may or may not be considered “retina”. At 27", it’s about 163 ppi.
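Those density figures are just the diagonal resolution in pixels divided by the diagonal size in inches. A quick sketch, assuming square pixels and the nominal advertised diagonal:

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch, measured along the panel's diagonal."""
    diagonal_px = math.hypot(width_px, height_px)  # Pythagoras on the pixel grid
    return diagonal_px / diagonal_in


for w, h, d in [(1920, 1200, 24), (3840, 2160, 24), (3840, 2160, 27)]:
    print(f'{w}x{h} at {d}": {ppi(w, h, d):.1f} ppi')
```

This prints roughly 94.3, 183.6 and 163.2 ppi for the three displays mentioned above.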

Some apps call these displays “HiDPI”, probably in order to avoid treading on Apple trademarks.

But that’s irrelevant. My arguments are not based on whether or not someone will use the term, but on how macOS treats the display. For a non-retina display (like mine), macOS presents a set of resolutions for you to choose from and does not scale its output.

A display that macOS considers “retina” doesn’t present a set of resolutions (unless you jump through some hoops to get the list). Instead, macOS outputs only the display’s native resolution and presents settings that let you configure the scaling factor for the user interface.

macOS auto-detects Apple’s own monitors as HiDPI (“retina”) capable. I think (but don’t know for sure) that it can also perform this detection for some third-party displays (I would assume those that report their physical size and therefore allow macOS to compute their native DPI).
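For what it’s worth, the physical size a display reports normally comes from its EDID data: in the 128-byte EDID base block, bytes 21 and 22 hold the maximum horizontal and vertical image size in whole centimetres. Below is a minimal sketch of the DPI arithmetic, assuming you already have the raw EDID bytes and know the panel’s native pixel width. The sample EDID is hypothetical, and this says nothing about how macOS itself actually decides which displays are HiDPI.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])
CM_PER_INCH = 2.54


def edid_horizontal_ppi(edid: bytes, native_width_px: int) -> float:
    """Estimate horizontal pixel density from an EDID base block's reported size."""
    if len(edid) < 128 or edid[:8] != EDID_HEADER:
        raise ValueError("not a valid EDID base block")
    width_cm = edid[21]  # max horizontal image size, whole centimetres
    if width_cm == 0:
        # Zero means no usable physical width (e.g. projectors), so no DPI can be derived.
        raise ValueError("display does not report a physical width")
    # Whole-centimetre rounding makes this an approximation only.
    return native_width_px / (width_cm / CM_PER_INCH)


# Hypothetical EDID for a 27" 4K panel reporting 60 cm x 34 cm.
edid = bytearray(128)
edid[:8] = EDID_HEADER
edid[21], edid[22] = 60, 34
print(round(edid_horizontal_ppi(bytes(edid), 3840)))  # ~163
```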

I did some web searching to see how to trick macOS into treating a display as HiDPI when it doesn’t auto-detect it as one. I found lots of tips, tricks and apps that claim to do this, but it appears that most of them stopped working with the advent of Apple Silicon, which really sucks.

But here are a few articles that might help:

- This Mac display hack is a must for 1440p displays | Macworld

- This is why your external monitor looks awful on an M1 Mac • The Register

- https://developer-book.com/post/how-to-set-up-hidpi-on-mac/

Sorry I can’t give you anything more concrete at this time. I was actually very surprised to learn that Apple killed off techniques that used to work. But I think it’s definitely worth giving some of these a try. If they don’t work, you haven’t lost more than a bit of time, and if they do work, it will probably look better than selecting a lower resolution.

Ah. He’s not wrong. Display scaling at a non-integer ratio does require your GPU to scale the screen. Yes, HiDPI scaling renders your desktop at 2x your configured “effective” resolution and then scales it to your display’s native resolution.
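To put numbers on that, here’s a small sketch of the pixel arithmetic for a hypothetical 4K panel. It assumes the 2x-then-downscale behaviour described above and models only the pixel counts, not what macOS or the GPU actually does internally.

```python
from fractions import Fraction


def hidpi_scaling(looks_like: tuple[int, int], native: tuple[int, int]):
    """Return the 2x backing-store size and the factor it is resampled by to fit the panel.

    Assumes the desktop is rendered at 2x the "looks like" resolution and
    then scaled to the panel's native resolution.
    """
    backing = (looks_like[0] * 2, looks_like[1] * 2)
    factor = Fraction(native[0], backing[0])  # horizontal scale applied afterwards
    return backing, factor


native_4k = (3840, 2160)
for looks_like in [(1920, 1080), (2560, 1440)]:
    backing, factor = hidpi_scaling(looks_like, native_4k)
    extra = (backing[0] * backing[1]) / (native_4k[0] * native_4k[1])
    note = "no resampling" if factor == 1 else f"resampled by {factor}"
    print(f"looks like {looks_like}: renders {backing}, {note}, {extra:.2f}x native pixels")
```

With “looks like” set to exactly half native (1920x1080), the backing store matches the panel and nothing needs resampling. At 2560x1440 the desktop is rendered at 5120x2880, about 1.78x as many pixels as the panel has, and then scaled by 3/4.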

But note that he only noticed the GPU load problem because he’s doing video rendering, which is a massively GPU-intensive application.

If you are using GPU-intensive applications, then you may come to the conclusion that he came to. But if you’re not (e.g. general-purpose computing such as office work and web browsing), then you’ll probably have more than enough GPU power to spare and may not notice any problem.

No hoop jumping required. It’s one button away. See @aforkosh’s excellent post above.

This might have been different on ancient OS X versions or legacy Intel Macs, but on reasonably recent macOS versions and Apple Silicon this has become quite simple and transparent.

Of course it can. In fact, I haven’t come across any 4K DP display in a couple of years that wasn’t immediately recognized by macOS as scalable “retina”.

I’d argue you’d have to hook up a rather old or low-res monitor (or perhaps use some kind of obscure connection) to run into any kind of issue here. If OTOH you buy a screen today, hook it up via DP or USB-C alt mode, and it offers reasonably high res, macOS will offer scaled resolutions right away.

This discussion has gone all over the place, but as I suspected, what this guy is talking about doesn’t apply to most users. He’s doing high-end graphics work with demanding applications like Blender and really pushing the GPU. An average user doesn’t have to worry about GPU usage at all. In my work as a broadcast/production engineer I’ve connected all kinds of monitors to all kinds of Macs (and PCs) and never given this a thought, even for editing systems.

I will add that I stay away from Apple’s displays as a rule because they are expensive, quirky and have limited connectivity. Apple’s attempts to make equipment “just work” are fine for non-technical uses, but get in the way in some cases. And the marketing hype (Retina!) obscures the reality of what people actually need. I often spec Dell UltraSharp displays for general-purpose use, and I generally prefer LG over Samsung. My wife is using a calibrated BenQ monitor for photo work.

Get the display that fits your budget for the size you want, and what your eyes can actually see (mine no longer benefit from ridiculously high resolutions because everything is too damn small).

– Eric

Oh, thanks David, that was all very informative. The fog is starting to clear on this issue. With respect to the video, I was more or less thinking the same, since I’m not doing video rendering (or even using Photoshop). Most of my work is text-based so I’m not putting a massive load on the GPU.

FWIW, the easy solution would be switching to the native resolution, or to exactly half of native, just before doing serious rendering. Generally, YouTube videos don’t get lots of views for keeping things in perspective.