I’ve just been playing with the camera on my new iPhone 13 Pro and discovered the digital zoom feature. Tap and hold the 3x zoom button to bring up the zoom dial, rack it up to 15x, and the result is spectacularly good. It’s MUCH sharper than taking a photo at 3x then enlarging it later. In Photoshop, pixelation in the 15x setting is scarcely noticeable at a scale where the 3x is hopelessly blocky. There must be some clever digital magic going on here. Perhaps this is already widely known, but I certainly didn’t know it!

As I understand it, the digital zoom uses image data from all three camera lenses in order to generate a composite image (using Apple’s image processing hardware). In contrast, if you select one of the fixed optical zoom settings, you just get the data from a single lens.

That’s interesting. But I still don’t understand how the 15x works - the wider-angle lenses (0.5x and 1x) surely couldn’t increase the resolution of the 3x telephoto? I can see that it could work the other way round - except that the field of view of the telephoto would obviously be smaller, so it would only sharpen the centre of the image.

Just to clarify - I can understand how combining the three lenses can create a smooth zoom to intermediate focal lengths between the fixed ones, but I don’t see how it can increase the telephoto beyond 3x except by simply enlarging the image as you would in Photos or Photoshop. In which case you’re losing pixels and reducing the resolution. The iPhone 13’s digital zoom must be doing something much cleverer.
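For reference, here is what "simply enlarging the image" amounts to, as a one-dimensional sketch: crop a scanline to a fifth of its width (a 5x zoom after the fact), then stretch it back to full width. The pixel values are made up, and nearest-neighbour resampling is an assumption chosen because it makes the blockiness obvious; smoother methods such as bilinear or bicubic hide the blocks, but none of them can add detail that the surviving samples don't contain.

```python
def crop(row, start, length):
    """Keep `length` pixels starting at `start` (a 1-D stand-in for cropping)."""
    return row[start:start + length]

def enlarge_nearest(row, factor):
    """Nearest-neighbour enlargement: each source pixel is repeated `factor` times."""
    out = []
    for value in row:
        out.extend([value] * factor)
    return out

# A hypothetical scanline of 20 brightness values from a full-width shot.
scanline = [10, 12, 15, 40, 200, 210, 60, 30, 25, 20,
            18, 90, 180, 170, 40, 35, 33, 31, 30, 29]

# Zoom 5x after the fact: keep 4 central pixels, then stretch them back to 20.
centre = crop(scanline, 8, 4)
zoomed = enlarge_nearest(centre, 5)

print(len(zoomed))       # 20: same width as the original...
print(len(set(zoomed)))  # 4: ...but only four distinct values, i.e. blocks
```

The output has the original pixel count but only the information of four pixels, which is the "losing pixels and reducing the resolution" effect described above.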

I don’t know what Apple is actually doing, but the wider-angle lenses may provide context for some kind of machine-learning-based interpolation process (e.g. is a region a gradient that should be smoothed or an edge between objects which should be sharpened).

This would be especially true for content near the edge of the telephoto image. The wide-angle images can provide lower-resolution data representing content beyond the edge of the telephoto image, which may be able to provide context that would otherwise be completely absent.

Could be, but in fact it greatly enhances sharpness over the whole image. Maybe it’s just a standard sharpening technique I haven’t come across before. However it’s done, it makes digital zoom far more useful - I’ve always considered it a waste of time. In effect the iPhone 13 Pro offers a telephoto much better than 3x and I’m puzzled that Apple doesn’t publicise it more. It would have helped my decision to buy!

Thanks for this fantastic tip. I had no idea.

Not the best framing, but these were taken seconds apart: I think the 1x standard camera and then the 10x zoom.

And then the first photo cropped.

Great photos! It’s astonishing, isn’t it?

This is really interesting! It makes sense that Apple would be using its computational photography chops to improve the quality of a digitally zoomed photo beyond what would be possible by simply cropping out the same section of a photo and expanding it. But I had always shied away from digital zoom under the theory that it wouldn’t be as good as optical zoom. That’s undoubtedly true, but it misses the point if the digital zoom is still better than manual crop/expand zooming afterward.

Can others try some test photos along these lines? My results with the iPhone 13 Pro seem unequivocal in recommending high digital zooms in favor of later cropping:

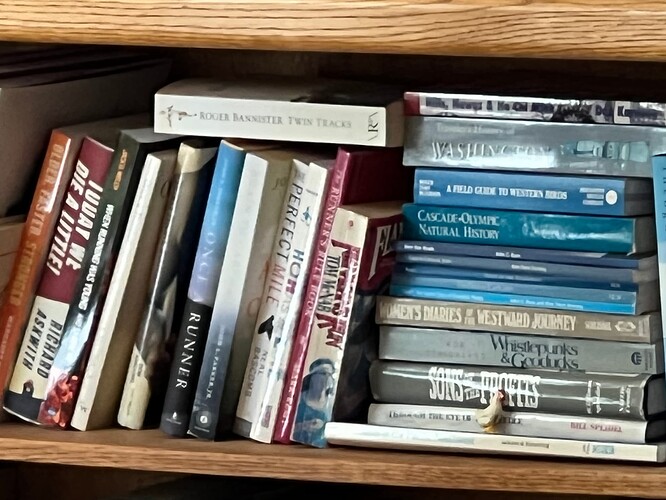

1X shot of my living room

15X shot of the books in the previous picture

Crop of the 1X shot to match the 15X shot

(The trick to getting the cropped photo to the same size here in Discourse is to click one of the % links under the image in the preview pane, and then edit the percentage in the compose pane to something larger to make the preview the right size—I had to use 240% for this, and 520% for @ddmiller’s photo above. It should look like this.)

Wow. Clearly, this isn’t some simple stretch-and-filter algorithm. I’m guessing that they’ve trained some kind of neural-network filter for doing the zoom, where it can selectively sharpen/blur different objects based on what it’s detected.

Note, with the books, that the text is (mostly) sharp, but the gradient images on the spines remain smooth. That’s beyond the ability of a simple sharpen/blur filter.
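For comparison, the closest conventional tool is a thresholded unsharp mask (Photoshop's Unsharp Mask filter exposes Amount, Radius and Threshold controls). The one-dimensional sketch below is a generic version of that idea, not anything Apple is known to use: it adds back the difference between each pixel and a local blur, but only where that difference exceeds a fixed threshold. That leaves a smooth gradient untouched while sharpening a hard edge, yet the rule is purely numerical; it cannot tell lettering from a printed picture of the same contrast, which is the kind of distinction a trained network could make. Output values are not clamped to a 0 to 255 range here.

```python
def box_blur(row, radius=1):
    """Average each pixel with its neighbours (window clipped at the ends)."""
    n = len(row)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out

def unsharp_mask(row, amount=1.0, threshold=0.0, radius=1):
    """Add back `amount` x (original - blurred), skipping small differences."""
    blurred = box_blur(row, radius)
    out = []
    for original, soft in zip(row, blurred):
        detail = original - soft
        if abs(detail) > threshold:
            out.append(original + amount * detail)  # sharpen: exaggerate the difference
        else:
            out.append(original)                    # low contrast: leave it alone
    return out

gradient = [0, 10, 20, 30, 40, 50, 60, 70]     # smooth ramp, like a shaded spine
step = [0, 0, 0, 0, 100, 100, 100, 100]        # hard edge, like a letter stroke

print(unsharp_mask(gradient, threshold=10))    # unchanged
print(unsharp_mask(step, threshold=10))        # overshoot on both sides of the edge
```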

Alternatively (or additionally), they may be playing some creative games with the camera’s lens/stabilization system to interpolate a higher-than-normal resolution.

We know (from Apple’s announcements) that they implement HDR by shooting multiple images at different exposures and combining them with neural-net software. If they do something similar for non-HDR images (probably with every frame at the same exposure), the images won’t all be perfectly aligned with each other, due to slight vibrations and maybe even deliberate motion by the optical image stabilization hardware. Software can combine these images, synthesizing a higher-than-normal resolution, which can then be downsampled to the required image size/zoom.
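The idea of combining slightly offset frames is usually called multi-frame super-resolution, and its simplest form is "shift and add". The sketch below is an idealized toy, not Apple's confirmed method: it assumes four frames offset by exactly half a pixel horizontally and/or vertically, with the offsets known in advance. In reality the offsets are random and must be estimated by aligning the frames, each sensor pixel averages light over its whole area (so the result still needs deblurring), and noise complicates everything. Under those assumptions, a half-pixel shift is a one-pixel shift on a grid twice as fine, and the four coarse frames interleave back into the fine scene.

```python
def capture(scene, dy, dx):
    """Simulate a low-res frame: take every second pixel of the fine scene,
    starting at (dy, dx). A half-pixel camera shift = one fine-grid pixel."""
    return [row[dx::2] for row in scene[dy::2]]

def shift_and_add(frames, factor=2):
    """Place each frame's samples onto a grid `factor` times finer,
    using its known offset. `frames` maps (dy, dx) -> low-res frame."""
    first = next(iter(frames.values()))
    h, w = len(first), len(first[0])
    fine = [[None] * (w * factor) for _ in range(h * factor)]
    for (dy, dx), frame in frames.items():
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                fine[y * factor + dy][x * factor + dx] = value
    return fine

scene = [[4 * r + c for c in range(4)] for r in range(4)]   # toy 4x4 "true" scene
frames = {(dy, dx): capture(scene, dy, dx) for dy in (0, 1) for dx in (0, 1)}
rebuilt = shift_and_add(frames)

print(frames[(0, 0)])    # [[0, 2], [8, 10]]: one frame sees a quarter of the samples
print(rebuilt == scene)  # True: four shifted frames recover the fine grid
```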

I don’t know that Apple is doing this, but I have seen the reverse used in so-called “pixel shifting” projectors. These devices produce a 4K image using a 1080p projector element that runs at 4x the frame rate, plus a mechanism (probably micro-actuators on the projector element or on mirrors in front of the element) that wiggles the image by half a pixel on each axis, producing a kind of two-dimensional interlacing. The result is a 4K image that is almost as sharp as what you’d get from a native 4K projector element, at a much lower cost.
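The projector arithmetic can be sketched in a few lines. Four 1080p sub-frames, each shifted by half a pixel, address exactly as many positions as a native 4K panel. The split below uses plain decimation and an assumed shift order and 60 Hz input rate; real devices filter each sub-frame, and because every 1080p pixel is physically twice the size of a 4K pixel the sub-frames overlap, which is why the result is "almost" rather than fully as sharp as native 4K.

```python
NATIVE_W, NATIVE_H = 1920, 1080              # 1080p projector element
SHIFTS = [(0, 0), (0, 1), (1, 1), (1, 0)]    # (dy, dx) in half-pixel steps; order assumed

def split_into_subframes(image):
    """Split a high-res image into four half-resolution sub-frames, one per
    half-pixel position. Plain decimation is a simplification."""
    return {(dy, dx): [row[dx::2] for row in image[dy::2]] for dy, dx in SHIFTS}

image = [[4 * r + c for c in range(4)] for r in range(4)]   # toy 4x4 "4K" frame
subframes = split_into_subframes(image)
print(subframes[(0, 1)])                  # [[1, 3], [9, 11]]

addressed = len(SHIFTS) * NATIVE_W * NATIVE_H
print(addressed, 3840 * 2160)             # 8294400 8294400

input_hz = 60                             # assumed input frame rate
print(input_hz * len(SHIFTS))             # 240 sub-frames per second
```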

The effect is a little less dramatic if you compare the 15x zoom with an expanded photo taken with the 3x lens, but it’s still very clearly better.

Apple always seems to position its iPhone cameras to be miles ahead of its competitors, and not just Pro model cameras. It’s a good selling point.

Here are two trial photos I took, looking across our valley. (It was a slightly misty day, African dust.)

The two main shots are taken with the 3x lens and 15x zoom, while the other two are enlarged in Photoshop to show detail. I’ve reduced them all to 2016 pixels wide for smaller file sizes.

3x optical

15x zoom

3x optical detail

15x zoom detail

I think what Apple is doing here is something I first saw used by Panasonic before 2010, and they have improved it in their latest cameras. After an image is captured from a sensor, the standard conversion of the raw data to JPEG discards a considerable amount of fine detail and fine color (see the Wikipedia entry on JPEG for how this is done). If one crops the resulting JPEG file and enlarges it, the absence of fine detail is very noticeable. On the other hand, if you (the camera designer) crop first, then adjust JPEG processing to use fewer pixels and discard less detail, the detail in the resulting 2x crop JPEG is very close to the quality of the uncropped standard-quality JPEG.
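Where JPEG's fine detail goes can be shown with a small sketch. JPEG transforms 8x8 blocks with a discrete cosine transform (DCT) and then quantizes the coefficients, using coarser steps for higher frequencies; chroma subsampling separately removes fine color. The one-dimensional version below borrows its step sizes from the first row of the example luminance table in the JPEG standard (Annex K) and ignores the level shift and entropy coding. It illustrates only the quantization loss, not Panasonic's or Apple's crop-first pipeline.

```python
import math

STEPS = [16, 11, 10, 16, 24, 40, 51, 61]   # one row of JPEG's example table (Annex K)

def _scale(k, n):
    return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

def dct(block):
    """Orthonormal 1-D DCT-II (JPEG applies the 2-D version to 8x8 blocks)."""
    n = len(block)
    return [_scale(k, n) * sum(x * math.cos(math.pi * (i + 0.5) * k / n)
                               for i, x in enumerate(block))
            for k in range(n)]

def idct(coeffs):
    """Inverse of dct() above (the orthonormal DCT-III)."""
    n = len(coeffs)
    return [sum(_scale(k, n) * c * math.cos(math.pi * (i + 0.5) * k / n)
                for k, c in enumerate(coeffs))
            for i in range(n)]

def quantize(coeffs):
    """The lossy step: divide by the step size and round to an integer."""
    return [round(c / q) for c, q in zip(coeffs, STEPS)]

def dequantize(levels):
    return [level * q for level, q in zip(levels, STEPS)]

# A flat grey patch with a faint, fine ripple (highest frequency, about +/-10 levels).
block = idct([400, 0, 0, 0, 0, 0, 0, 20])
levels = quantize(dct(block))
restored = idct(dequantize(levels))

print(levels)                                   # [25, 0, 0, 0, 0, 0, 0, 0]
print(round(max(block) - min(block), 1))        # ripple before encoding
print(round(max(restored) - min(restored), 6))  # ripple after decoding: gone
```

The ripple's coefficient (20) is smaller than its step size (61), so it rounds to zero and the decoded block comes back perfectly flat.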

So I’m no graphics guru, and this observation may be what @Eric_Nepean is referring to, but I did my own test with an outdoor shot, and the results were similar to what @drakewords posted above: The 15x looks better than the cropped 3x, but the difference isn’t as dramatic as @ace’s indoor shot of the bookcase.

However, I noticed that the pixel count for my 3x cropped image was (802x602), and the pixel count for the 15x image was, like the original, (4032x3024). Clearly, the results are what matters, but it made me wonder if it’s really so surprising that at a given size, a photo with 25x more pixels should look better? Or maybe I’m not thinking about this correctly.
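The 25x figure follows directly from the zoom ratio: going from 3x to 15x is a 5x crop in each dimension, which keeps 1/25 of the area. A quick check with the dimensions quoted in this post:

```python
def pixel_ratio(full, crop):
    """How many times more pixels `full` has than `crop` (each a (width, height) pair)."""
    return (full[0] * full[1]) / (crop[0] * crop[1])

full_frame = (4032, 3024)   # 15x digital zoom output (same as the original)
cropped_3x = (802, 602)     # 3x shot cropped to match the 15x framing

print(round(pixel_ratio(full_frame, cropped_3x), 1))  # 25.3
print((15 / 3) ** 2)                                  # 25.0
```

The arithmetic says nothing about whether those extra pixels hold real detail or are interpolated; that is exactly the open question in this thread.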

What I think is amazing is that a 12 megapixel 3x telephoto lens digitally zoomed 5x more than that is still a pretty clear and not “noisy” 12 megapixels.

One thing I didn’t note in my post above is that I did my cropping in the Photos app on the iPhone itself. So I would expect that Apple wouldn’t be converting to JPEG at that point; if nothing else, the images are all HEIC.

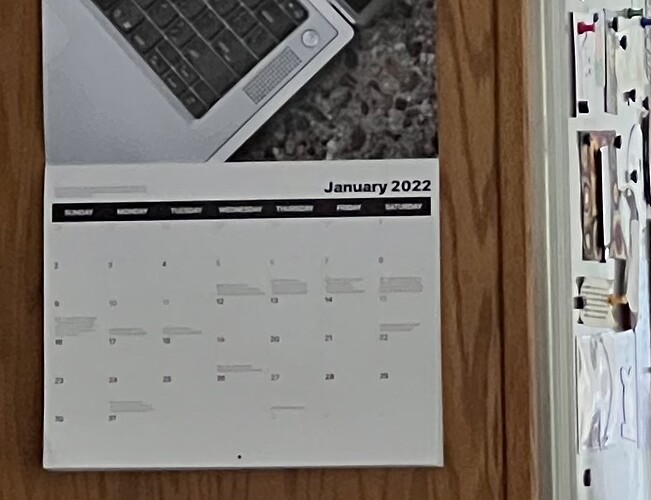

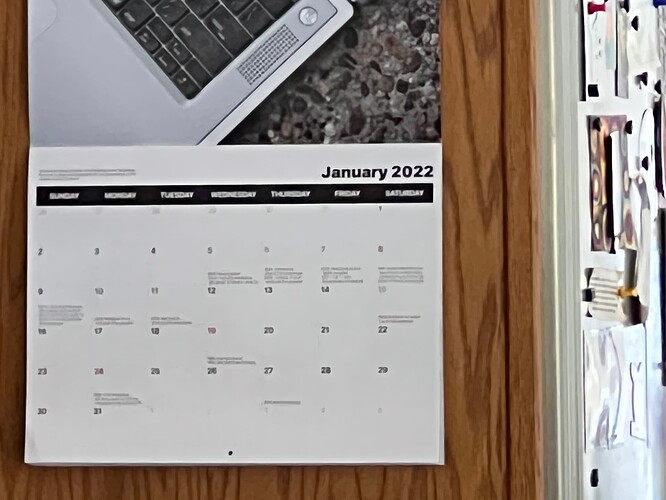

This is a good point, so I did another test, sticking with indoor images that contain text, since they make it a lot easier to discern fine detail. Discourse sizes all the images to 666 × 500 to fit. My takeaway is that 15x digital zoom really is worth doing at all times, partly because of Apple’s computational photography, but also because there are just more pixels in the resulting image than if you crop. @jeffc, can you help explain any of this?

1X shot of my kitchen from across the house (4032 × 3024)

3X shot of the same scene (4032 × 3024)

1x crop/expand zoom on Stephen Hackett’s Apple calendar (263 × 197, expanded 255% here in Discourse)

3x crop/expand zoom of the calendar (787 × 604)

15X digital zoom of the calendar (4032 × 3024)
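The crop sizes above are roughly what the zoom ratios predict. A small sketch, assuming every shot is 4032 × 3024 and that the 1x, 3x and 15x labels are exact field-of-view ratios (they are nominal, so treat the results as approximate):

```python
def matching_crop(size, source_zoom, target_zoom):
    """Pixel size of the crop from a `source_zoom` photo that covers the same
    field of view as a `target_zoom` photo, assuming both are `size` pixels."""
    factor = target_zoom / source_zoom
    return (round(size[0] / factor), round(size[1] / factor))

SENSOR = (4032, 3024)
print(matching_crop(SENSOR, 1, 15))   # (269, 202)
print(matching_crop(SENSOR, 3, 15))   # (806, 605)
```

The hand-made crops (263 × 197 and 787 × 604) are a little smaller than these estimates, as you would expect from cropping by eye, while the 15x digital zoom keeps all 4032 × 3024 pixels.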

Agreed. Your last two images are very similar to what I saw on my outdoor shot. (And peeking out your windows I can see why you’re sticking to indoor photos at the moment.)

I’ll be throwing away my full-frame Nikon D750 and 70-200 zoom and just upgrading my iPhone.


I got rid of my beloved Canon SLR a while back when I got tired of hauling a bulky camera and lenses with me all the time. Since I’m not a professional photographer, an iPhone camera fills my needs perfectly.