Originally published at: Apple’s January 2025 OS Updates Enhance Apple Intelligence, Fix Bugs - TidBITS

Apple has released its third major set of operating system updates, including macOS 15.3 Sequoia, iOS 18.3, iPadOS 18.3, watchOS 11.3, visionOS 2.3, tvOS 18.3, and HomePod Software 18.3. Enhancements to Apple Intelligence dominate the release notes, though Apple also fixed a few bugs and addressed numerous security vulnerabilities.

The company also released iPadOS 17.7.4, macOS 14.7.3 Sonoma, macOS 13.7.3 Ventura, and Safari 18.3 for Sonoma and Ventura, all of which include security updates from the current operating systems. For the second consecutive release, Apple did not update iOS 17 to align with iPadOS 17, presumably because all iPhones capable of running iOS 17 can also support iOS 18.

Apple Intelligence Changes

Although we’re still waiting for Siri to gain onscreen awareness, understand personal context, and work more deeply with apps, these updates bring changes to Apple Intelligence notification summaries, Visual Intelligence, and Genmoji.

Notification Summaries

After complaints that Apple Intelligence generated blatantly incorrect summaries of news notifications and misidentified spouses, Apple responded by changing the style of summarized notifications to italics. Previously, the only indicator of a summarized notification was a glyph.

More tellingly, the company temporarily turned off notification summaries for all apps in the App Store’s News & Entertainment category. We presume Apple’s engineers are putting more effort into summarizing news articles and verifying that the results match the source. Apple says that “users who opt-in will see them again when the feature becomes available.”

Finally, Apple made it easier to manage settings for notification summaries from the Lock Screen. On an iPhone, for instance, you can swipe right to reveal an Options button, tap it, and then tap Turn Off AppName Summaries. You can also report a concern with a summary.

Visual Intelligence Adds Scheduling, Plant and Animal Identification

Apple Intelligence enhances the new Camera Control button on iPhone 16 models, allowing it to take action based on what’s in the viewfinder. Initially, it could only ask ChatGPT about what it saw or perform a Google image search. Now, when you press and hold the Camera Control, Visual Intelligence can also detect if you’re pointing at a poster or flyer and suggest creating a calendar event. Additionally, if it recognizes a plant or animal within the frame, it will identify it and provide more information with a tap.

I generally use the Seek and Leaf Identification apps to learn plant names, so once spring arrives, I’ll be curious to see if Visual Intelligence does as well. Poster scanning may be a bigger win because Ithaca is a college town with many events advertised on local bulletin boards.

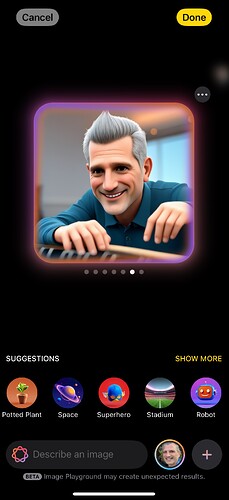

Genmoji Arrive on the Mac

iOS 18.2 and iPadOS 18.2 introduced the custom emoji that Apple calls Genmoji, but macOS 15.2 lacked the feature. With macOS 15.3, the Mac has now caught up. The feature remains the same—you describe what you want to see in a few words, and you can base the emoji on a picture of a person. The Genmoji you create are actually stickers, but you can use them just like regular emojis.

Calculator Gains Repeated Operations

The Calculator app on the Mac, iPhone, and iPad now repeats the last mathematical operation each time you click or tap the equals sign. In other words, if you use it to multiply 2 by 2, clicking the equals sign the first time gives you 4. Clicking it again multiplies 4 by 2, then 8 by 2, then 16 by 2, and so forth. Although I can’t imagine using this feature on a calculator (as opposed to in a spreadsheet), it could be useful for cumulative multiplication or division (such as when resizing an image), calculating compound interest, or modeling exponential growth.
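To make the arithmetic concrete, here is a minimal Python sketch of the repeated-equals behavior described above. It only models what the display would show after each press. It is not Apple's implementation, and the function name `repeated_equals` and the 5% rate in the second example are assumptions chosen for illustration.

```python
import operator
from typing import Callable, List


def repeated_equals(
    first: float,
    op: Callable[[float, float], float],
    operand: float,
    presses: int,
) -> List[float]:
    """Return the value shown after each press of the equals sign.

    The first press computes `first op operand`; every later press
    reapplies the same operation and operand to the previous result.
    """
    results = []
    value = first
    for _ in range(presses):
        value = op(value, operand)
        results.append(value)
    return results


# 2 x 2, with the equals sign pressed four times
doubling = repeated_equals(2, operator.mul, 2, 4)
print(doubling)  # [4, 8, 16, 32]

# Compound interest: 1,000 growing at a hypothetical 5% per period
balances = repeated_equals(1000, operator.mul, 1.05, 3)
print([round(b, 2) for b in balances])
```

The second call shows why the feature suits compound interest: entering 1000 × 1.05 once and pressing the equals sign repeatedly walks through the balance one period at a time.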

2025 Black Unity Collection

To honor Black History Month, Apple unveiled its Black Unity Collection for 2025, which includes a special-edition Apple Watch Black Unity Sport Loop, a matching watch face, and iPhone and iPad wallpapers. I mention these because the new Unity Rhythm watch face is the main change in watchOS 11.3. Otherwise, Apple merely states that it offers improvements and bug fixes.

Bug and Security Fixes

Apple admitted to only two bug fixes in iOS 18.3 and iPadOS 18.3, which:

- Fix an issue where the keyboard might disappear when initiating a typed Siri request

- Resolve an issue where audio playback continues until the song ends, even after closing Apple Music

Although the macOS 15.3 release notes don’t mention any bug fixes, Apple reportedly resolved the Apple Software Restore bug that prevented SuperDuper, Carbon Copy Cloner, and ChronoSync from creating bootable backups (see “It’s Time to Move On from Bootable Backups,” 23 December 2024). I’ve confirmed that SuperDuper can once again complete a bootable backup, and I assume the others can as well. That said, while I could select my backup drive in the macOS startup options screen, when my M1 MacBook Air restarted, it booted from the internal drive and displayed a kernel panic dialog. When I consulted ChatGPT and Claude about the panic log, they indicated it was related to a missing library. So perhaps Apple Software Restore isn’t fully functional yet.

The remaining releases—visionOS 2.3 (I assume, since Apple hasn’t updated its release notes page), tvOS 18.3, and HomePod Software 18.3—only acknowledge “performance and stability improvements.”

On the security front, here’s the overview:

- macOS 15.3: 57 vulnerabilities fixed, 1 zero-day

- macOS 14.7.3: 38 vulnerabilities fixed

- macOS 13.7.3: 30 vulnerabilities fixed

- iOS 18.3 and iPadOS 18.3: 26 vulnerabilities fixed, 1 zero-day

- iPadOS 17.7.4: 14 vulnerabilities fixed

- watchOS 11.3: 15 vulnerabilities fixed, 1 zero-day

- visionOS 2.3: 18 vulnerabilities fixed, 1 zero-day

- tvOS 18.3: 15 vulnerabilities fixed, 1 zero-day

- Safari 18.3: 7 vulnerabilities fixed

The zero-day vulnerability exists in the CoreMedia framework shared by most of Apple’s operating systems. Apple states that a malicious application may exploit this vulnerability to gain elevated privileges. The company says it’s aware of a report that the vulnerability “may have been actively exploited against versions of iOS before iOS 17.2.”

Updating Advice

I’ve been running macOS 15.3 and iOS 18.3 betas for a while with no issues. Although it’s tough to get excited about the new features or bug fixes in these releases, I recommend updating soon since some of these updates address a zero-day vulnerability.