Time Machine backup to a Synology NAS has worked for me. Of course you should always have more than one kind of backup.

Long-time listener, first-time caller. Appreciate this article and all the knowledgeable comments.

I am trying the option of a 24/7 Mac mini with a USB SSD attached, devoted exclusively to being the TM drive for my MacBook Air.

I am following the directions in the linked Apple article but no matter how often I reformat the drive and retry it, I get an error when trying to select the SSD on the mac mini as a target. It shows up in my MbAir TM preferences as a viable target, but I get the following error:

“Time Machine Can’t Connect to the Backup Disk / There was an error authenticating with the provided username or password” even when using a known good username/password (that works through the Connect to Server dialog)

Any ideas?

That’s precisely the issue and error I kept getting. I would create two identical shares, one for each laptop with identical permissions. One of them would allow selecting as a TM destination…and the other wouldn’t. Eventually got both to work and they worked until they randomly failed.

At that point I rolled my own CCC solution but it only happens daily. I set up TM with a direct connected drive on both laptops but only plug it in every couple of weeks. The advantage of having it set instead of TM off is that the local TM snapshots keep happening so I can recover something from an hour ago if needed.

If you use the CCC option…make sure to use remote Mac as the destination instead of a mounted share…the latter didn’t work reliably if the laptop was asleep but the former just works.

Thanks Neil I have a similar CCC nightly backup as well, but I’d like to have the hourly versioning and ease of use of a functioning TM backup. Also redundancy.

I also use Arq via SFTP to the same Mac Mini (so it continues to back up when I travel) as well as a Hackintosh that lives in my parents’ basement across the country.

But I’d really really like to get TM over network. I hate attaching and unattaching the damn USB SSD drive throughout the day — it’s mind boggling that it’s 2022 and we still rely on that.

Thanks Simon. This leaves an issue open. On 25 Jan 2022 at 16:45, David C. <tidbits-talk@talk.tidbits.com> wrote:

jean_pierre_smith:

When my last time capsule died, I replaced it by external USB3 small size drives (without individual power supply) …

I would be a bit concerned with this choice of hardware. Particularly:

- I don’t trust bus-powered drives to be reliable. The power delivered by a USB bus isn’t necessarily consistent or sufficient for a high powered drive. Especially if there is a hub involved. I always prefer external power for my backup drives (of course, since I prefer to use 3.5" HDDs, I couldn’t choose otherwise even if I wanted to).

- I’m always concerned about the reliability of pocket/portable drives for extended use scenarios. I think manufacturers try to cut costs wherever possible for these drives, and therefore don’t use the most reliable mechanisms.

This unfortunately leaves open, for me, the question of only powering the backup drives when a backup is forthcoming or in progress. Why? To save energy, and because my old LaCie Big is noisy even when its drives are unmounted.

If you’re replacing your old mechanical HDD with a new SSD you’ll already be saving quite heavily on power. If you want you can disconnect the drive when you’re not using it to force it to power off (probably makes more sense in terms of ransomware protection but whatever). If you prefer to leave it connected at all times, you can connect 2.5" drives through switched USB hubs such that you can actually turn off the individual USB port that drive is connected to. I’m not sure I trust those hubs a whole lot though, and my suspicion is that their trickle currents (they like to come with lots of lights and bling) will likely outweigh the minute savings of powering off the already unmounted drive.

There are external 2.5" cases that offer their own power input ports so you don’t have to rely on bus power. I have never had an issue with bus power (using quality powered TB hubs or direct connections to Macs, that is), but if added protection is your concern, one of these will do the trick. Obviously, you then need to supply it with a reliable quality power supply for this to really add to your protection.

As I’m sure everyone here who uses it already knows, CCC can be set for hourly backup too.

@neil1 I solved this by turning file sharing off and on on my mac mini 24/7 server with the TM target SSD attached. I relaunched the finder on My MbA for good measure. Backing up now. Who knows how long it will last, but small steps

Yeah…I had tried that too and it worked…for a while, but then it failed randomly again on one or the other of the two laptops…I finally decided that if TM over the network was that fussy and finicky it’s not really a good backup scheme at all…hence my roll-your-own scheme. The really strange thing was that I was using 2 TM drives…one on my Mac mini and one on my iMac…for both of the laptops, and the 4 shares were all set up the same, yet which one broke switched randomly between the two destinations. And even when TM said the drive was not available I could easily open up the share for that laptop…so how can it not be available?

Yep…but for a laptop even the daily ones are multiple GB, and I decided it wasn’t worth it to do hourly based on network bandwidth. I might go back and set them up for a couple of times per day though…I didn’t do that originally since the backups to my mini were to a spinning Seagate Backup+ drive and it only writes 20 or 30 MB/sec. Now that I’ve got an SSD on that machine for the shares it’s 10x that fast so even multiple GB backups aren’t really that long. The iMac which is my other destination is writing to a TB RAID so it’s plenty fast even though they’re spinning drives.
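To put rough numbers on the speed difference described above, here is a back-of-the-envelope sketch. The 5 GB backup size is only an assumed stand-in for "multiple GB", 25 MB/s is the midpoint of the quoted 20 to 30 MB/s, and the function name is made up for illustration. It ignores network overhead and small-file slowdowns, so real backups will take longer.

```shell
# Rough transfer-time estimate: size in GB, sustained write speed in MB/s.
# Assumes 1 GB = 1000 MB and ignores protocol overhead and small-file slowdowns.
backup_seconds() {
    size_gb=$1
    mb_per_sec=$2
    echo $(( size_gb * 1000 / mb_per_sec ))
}

# Example: an assumed 5 GB daily backup to the old spinning drive vs. the SSD.
backup_seconds 5 25    # prints 200 (seconds at 25 MB/s)
backup_seconds 5 250   # prints 20 (seconds at 10x that speed)
```

Even at the slower rate that is only a few minutes of raw transfer, so the network link may well be the real limit rather than the destination drive.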

Huh, bandwidth point taken in some cases, but does a CCC backup interrupt work while it’s running? I don’t do them hourly and wouldn’t know.

It shouldn’t, although you may notice some CPU and disk usage as it runs (much like you do for Time Machine).

When making a backup of an APFS volume, CCC creates a temporary snapshot, and then it performs an incremental backup of the snapshot. So the backup will contain your drive’s content at the time the backup started (and won’t be affected by files you change while it is running).

V. cool, thanks, David

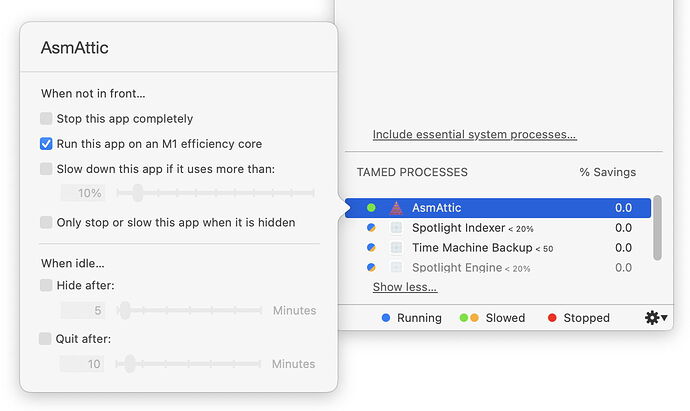

This is a really interesting point for M1 Mac users.

On Monterey we observe that TM runs exclusively on the E cores because it has been set to the lowest Quality of Service level (9). For non-Pro/Max M1s this also means it runs on E cores at their lowest frequency of 1 GHz; they will not ramp up to their 2 GHz peak clock for this thread. (On the Pro/Max M1s, if there are multiple threads at QoS level 9, and ignoring things like i/o throttling in the case of backupd, the E cores will ramp up their clock to match the peak E performance of the regular M1 E cluster despite having only half the E cores in its E cluster.) One really nice thing about this is that it’s very hard for the M1 user to notice TM is running at all: apart from perhaps i/o bottlenecks in certain special cases, whatever you’re actively doing, and certainly all the GUI action, is driven with priority over what TM is doing in the background. The one downside is that there is presently no known method for the user to elevate the QoS level (“promote” a thread using e.g. taskpolicy) and get it to run on the P cores if, say, you’re stepping away from your Mac for an hour and want that initial TM backup to run through in the meantime. In such a scenario you’d want it to complete in minimum time rather than at minimum noticeable impact, hence you want the P cores to fire away. But that is something we cannot presently adjust (at least to our present knowledge).

How does CCC deal with this? What is its QoS level for its backup threads? If it’s set to higher values it could also run on P cores. The advantage this has is that a user can indeed force it down to run on E cores (and even later choose to return it to P cores) if they prefer the backup to run unnoticed instead of in minimum time, because taskpolicy, or rather the underlying setpriority() function, does indeed work in that direction. Does CCC expose any of this control to the user?

Here’s an example of such an option:

Does anybody have the details on how CCC deals with this on M1?
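For anyone who wants to try the demotion direction described above, a minimal sketch with taskpolicy might look like the following. The function names and the process name in the usage comment are placeholders, and whether CCC's helper processes respond to this the way you would hope is exactly the open question, so treat it as an experiment rather than a recipe.

```shell
# Demote a running process to background QoS so macOS schedules it on the E cores.
# $1 = process ID.
demote_to_background() {
    taskpolicy -b -p "$1"
}

# Undo the demotion later. This only moves the process back out of the
# background state; it cannot push a process that is background by design
# (like backupd) onto the P cores.
restore_from_background() {
    taskpolicy -B -p "$1"
}

# Usage (the process name is a placeholder):
#   pid=$(pgrep -x "SomeBackupTool")
#   demote_to_background "$pid"
#   restore_from_background "$pid"
```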

I’m always on the lookout for interesting Raspberry Pi projects so I set up a Pi 3 as a Time Machine target for an M1 Mac mini. Its storage drive is a 500GB Samsung T5 SSD formatted as HFS+J. It uses Samba. It’s networked over wi-fi.

The Pi is running a 64-bit version of Raspberry Pi OS Lite (a server edition) that was just released from beta.

The combination of the modest Raspberry Pi 3 hardware–1GB RAM, 1.2 GHz clock speed, USB 2.0–and wireless connectivity means that backups and retrievals are noticeably slower than those on my previous TM setup with a Thunderbolt-connected SSD. However, after its first few days the system is working reliably, if slowly.
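The post above doesn't show the Samba configuration used, so the following is only a sketch of what a Time Machine share stanza typically looks like with Samba's vfs_fruit module. The share name, mount path, and user are all assumptions, and the helper function is made up for illustration.

```shell
# Print a minimal smb.conf share stanza for a Time Machine target.
# $1 = share name, $2 = path to the mounted backup drive, $3 = allowed user.
tm_share_stanza() {
    cat <<EOF
[$1]
   path = $2
   valid users = $3
   read only = no
   vfs objects = catia fruit streams_xattr
   fruit:time machine = yes
EOF
}

# Usage (all three values are placeholders):
#   tm_share_stanza timemachine /mnt/tm pi | sudo tee -a /etc/samba/smb.conf
```

The `fruit:time machine = yes` line is what makes the share advertise itself to macOS as a valid Time Machine destination; the Samba service needs a restart after the file changes.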

In general, the answer is don’t bother migrating a Time Machine backup from an old drive to a new one. Just put the old one in a drawer in the highly unlikely event that you’ll need to go back to a version of a file that’s on it and not on the newly created Time Machine backup. Eventually, you’ll get bored of keeping it in the drawer and will reformat it and use it for something else.

Very good advice.

In fact, you cannot reasonably do this with TM to an APFS store anyhow because of the way APFS TM stores snapshots and the lack of user-facing ability to copy such snapshots. If you’re still using TM to HFS+, you could in principle clone your old HFS+ store to a newer drive and then use that (in fact a long time ago I did that myself). It works, but these days you don’t really want to be on HFS+ for TM anyway. Go APFS for TM and follow @ace’s advice when it’s time to move to a larger/newer TM drive.

Thanks, Adam. That’s really sensible advice. I’ll do it.

It would be an interesting test to see if you could do it with an image copy. Something like:

- Connect source and target drives. Make sure the target is equal to or larger than the source

- Make sure all volumes on both drives are unmounted

- Perform an image-copy from one device to the other. This should duplicate everything, including the partition table and all file system control structures. For example, if the source is /dev/disk8 and the target is /dev/disk9, you might type:

  $ dd if=/dev/disk8 of=/dev/disk9 bs=1m

- When completed, disconnect (or power-off) both drives. Then power-on the target. If the image-copy worked, you should see it mount and its snapshots should be available.

- Now use Disk Utility to enlarge the APFS container to fill the entire drive (assuming the new one is larger than the old one was)
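The unmount and copy steps above could be wrapped in a small script along these lines. This is an untested sketch: the disk8/disk9 names are just the example values from the steps, the function names are made up, and using the raw rdisk device nodes plus sudo is my own assumption about what a practical run would need. It defaults to a dry run that only prints the commands, since dd will overwrite whatever device you point it at.

```shell
# Set DRY_RUN=0 only after double-checking both device names with `diskutil list`.
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# $1 = source disk (e.g. disk8), $2 = target disk (e.g. disk9).
# dd overwrites the target completely.
clone_disk() {
    run diskutil unmountDisk "/dev/$1"
    run diskutil unmountDisk "/dev/$2"
    # The raw (rdisk) device nodes are usually much faster for dd on macOS.
    run sudo dd "if=/dev/r$1" "of=/dev/r$2" bs=1m
}

# Usage: clone_disk disk8 disk9
```

The resize step is left to Disk Utility as described, and whether the copied snapshots remain usable by Time Machine is still the untested part.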

Assuming this works, you will want to make sure you do not try to connect both the source and target drives at the same time, because they will have identical UUIDs and this may cause problems.

In theory, you should be able to use the internal /System/Library/Filesystems/apfs.fs/Contents/Resources/apfs.util command (type man apfs.util for its documentation) with the -s option to generate a new UUID for an APFS container, but I have no idea if it will actually work, nor do I know how/if it will affect the volume’s ability to work as a Time Machine volume. But if it fails, the worst that should happen is that you may have to re-duplicate the volume (which may take several hours for a large drive, of course).

Have working 2 TB Time capsule ethernet connected to my iMac running Catalina. Works fine but is full which is OK but does not allow adding a second computer…

Have new M1 MacbookPro running Monterey and will use 2 TB external SSD for backup using CCC. Is very fast but needs to be initiated manually.

Have 3 TB Time capsule that is unused. Any way to use it on the network (Uses Verizon Quantum router) for the M1 Macbook - connecting it to existing 2 TB time capsule or??