One of the reasons I upgraded my old iPhone to a 16 Pro a few months back was in anticipation of long-awaited improvements to Dictation. Specifically, I had hoped (and indeed thought I had read somewhere in the press) that AI was going to be used to improve the accuracy of Dictation by doing things like recognizing that you are in a conversation with someone who spells their name differently from the common spelling and spelling it correctly when you say it (e.g., I’m talking to Kathryne, but when I dictate “Hi Kathryne” I get “Hi Katherine”), or recognizing obscure words that you have been using frequently or have just manually corrected, so that you don’t have to keep correcting them.

So it’s been frustrating to find that the new 16 Pro, even after each of the successive AI releases, seems to be worse at recognizing obscure words than my old 11 Pro was, and definitely doesn’t seem to be analyzing my data or activity to build any kind of language model to help with dictation.

While Image Playground was good for a chuckle, it’s relatively useless in my day-to-day use of the iPhone - I am constantly dictating technical notes to work colleagues. What I really need is a major improvement in the accuracy of dictation. I’m curious whether others are seeing improvements in the quality of dictation - or whether there’s a setting I need to adjust to allow it to access my data and become more accurate. Thanks!

I’m hoping that the iOS 18.3 update, now in beta and due in late January, will improve dictation because that’s the update that’s supposed to give Siri awareness of our personal context.

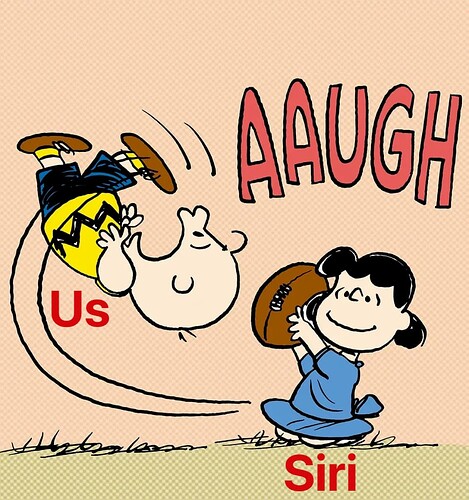

But when it comes to Siri, this is what comes to mind. ;-)

Just saw this in Mark Gurman’s Power On newsletter. It may be a bit of a wait.

Q: What’s coming in iOS 18.4 next year?

A: First, let’s review recent releases. Version 18.1 was notable because it included the first Apple Intelligence features. Then you had iOS 18.2, which was massive given its inclusion of ChatGPT integration, visual intelligence features and generative AI for images. Apple’s iOS 18.3 — now available in a public beta test — is nothing to write home about. That brings us to 18.4, which is known internally as the company’s “E” release. It should be significant, especially when it comes to Siri. Now, Apple’s marketing implies that the company already revamped its voice assistant, but it’s really only made cosmetic changes so far. That should change by April, when 18.4 arrives. The release will include the revamped App Intents technology that allows Siri to more precisely control apps. Siri will also be able to see what’s on a user’s display and tap other data to better respond to requests. As for a real conversational, AI-based upgrade to Siri, don’t expect that until iOS 19.4 in 2026. (Of course, the company could still announce it well ahead of its release date, just as it did with other Apple Intelligence features.)