Looking at prices and specs, surely it’ll only be a few years before users ask why they can’t plug their USB-C iPhones and iPads into a screen and use them as a low-end Mac mini.

Already exists. Samsung DeX. It probably sucks, and Apple’s implementation will hopefully be better.

Knowing Apple, they’ll remove the iPhone’s Lightning port to avoid such considerations.

That will definitely be a concern a few years after the last Intel-based Mac ships. I think these days Apple’s Xcode is much more dominant for developing software for the Mac and through it Apple can at least encourage companies to keep their binaries “fat.” Another point of influence is Apple could require all Mac App Store applications be ARM/Intel; the store could also deliver to each Mac just the code compiled for that hardware.
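For anyone curious what “fat” means in practice, here is a minimal sketch of reading the architecture list out of a universal binary's header. It assumes the classic 32-bit fat header layout (a big-endian magic number, a count, then 20-byte entries); the function name `fat_architectures` and the synthetic header in the demo are made up for illustration and this is not a substitute for Apple's own tools.

```python
import struct
from typing import List

# Big-endian magic of a 32-bit universal ("fat") header.
# Caveat: Java class files start with the same four bytes.
FAT_MAGIC = 0xCAFEBABE

# Mach-O CPU type constants for the two architectures discussed here.
CPU_NAMES = {0x01000007: "x86_64", 0x0100000C: "arm64"}


def fat_architectures(data: bytes) -> List[str]:
    """Return the architectures listed in a fat header, or [] if not fat."""
    if len(data) < 8:
        return []
    magic, count = struct.unpack(">II", data[:8])
    if magic != FAT_MAGIC:
        return []
    names = []
    for i in range(count):
        start = 8 + i * 20
        entry = data[start:start + 20]
        if len(entry) < 20:
            break  # truncated header; stop rather than guess
        # Each entry: cputype, cpusubtype, offset, size, align.
        cputype, _subtype, _offset, _size, _align = struct.unpack(">5I", entry)
        names.append(CPU_NAMES.get(cputype, hex(cputype)))
    return names


# Synthetic two-slice header, purely for demonstration.
demo = (
    struct.pack(">II", FAT_MAGIC, 2)
    + struct.pack(">5I", 0x01000007, 3, 4096, 100, 12)
    + struct.pack(">5I", 0x0100000C, 0, 8192, 100, 14)
)
print(fat_architectures(demo))
```

A store that delivers only the matching slice would, in effect, be picking one of these entries and shipping just that part of the file.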

No, and I doubt they’d try. Their competition will be Intel graphics, which they can probably already beat. For whatever reason, Apple and Nvidia have parted ways so they’ll need to work with AMD to make Radeon cards work on non-Intel Macs to satisfy the businesses that buy Mac Pros for GPU work.

The problems with Nvidia were very serious, and Apple was dealing with repairs for MacBooks for years. And for some time Nvidia refused to admit their cards were at fault:

https://support.apple.com/en-us/HT203254

In addition to faulty processors, there were supply chain issues that had a big impact on Apple’s product delivery:

I wonder if sometime in the future Apple will start developing their own graphics cards?

I agree. Nothing indicates so far they’re trying to replace dedicated desktop-class GPUs similar to what Nvidia or AMD produce.

Considering the competitive advantage Nvidia has been displaying for the last couple of GPU generations, it’s high time for Apple to get real here and put petty vendettas behind it. Nvidia offers something nobody else can match. It’s time Apple worked to make that accessible to their professional customers, who don’t care about bygones. Restricting yourself to a single GPU supplier is silly. It essentially puts Apple in the same boat with AMD that they just got out of with Intel.

A petty vendetta? Apple gets hit with a very, very major class action lawsuit because Nvidia refused to own up to the fact that Nvidia chips were bricking MacBook Pros? This literally cost Apple many years of ongoing bad publicity:

Apple had to take Nvidia to court to force them to admit to the flaws and help Apple pay for repairs. Nvidia had to fork over $148.6 million for repairs that stretched over about 6-7 years:

And Apple missed the announced delivery date for its brand new 30 inch Cinema Displays by many months because Nvidia couldn’t deliver the chips on schedule. I linked to this in a previous post.

Then Nvidia yanked Apple into a patent lawsuit with Intel, which Apple had no intention of participating in:

Clearly, this is not “a petty vendetta.” It’s been a smart business move.

LOL. Good one. The only people who can afford that opinion are those who professionally haven’t had to rely on high-performance frameworks such as CUDA. Or those who can afford to wait twice as long for their GPU to return results.

I think anyone who needs CUDA has already gone PC.

The vast majority of Mac graphics/video/animation users are happy with the alternatives; the overall ecosystem is more important to them.

I’m afraid that’s exactly the point, Tommy. Some Apple fans tend to have this myopic view that GPUs are about FCP. Scientific computing has zero interest in FCP, but it has been heavily involved with CUDA. Apple has likely lost around $200k from my department alone just because of their AMD single-sourcing folly. Apple used to care about scientific computing; now they seem to believe they can do well without it. Maybe, maybe not. Regardless, tying yourself to a sole GPU supplier just makes no sense, even if Apple believes the only pros that matter are video studios.

It’s not my field, but I thought the performance advantages of CUDA over OpenCL had been overstated. The remaining problem is that CUDA has a robust ecosystem of software libraries, so you don’t have to write nearly so much yourself.

Or the cloud.

That’s been my experience, Curtis. You could attempt to write OpenCL code yourself, and if you were really interested you might be able to make it perform almost as well.

People in the field I work in are for the most part not coders. We have scientific problems we are attempting to solve and our interest lies in the results, not the tools to get there. So we tend to use what’s already available, especially if that stuff is really good. If there are highly efficient CUDA libraries around or if we have ML tools nicely tailored to a small farm of Teslas that’s what we’ll want to use.

Could it be done in OpenCL or on AMD? Probably. Will people go the extra mile? No. Even before, we did lots of computation on clusters, but there was always a fair amount of ‘local’ work, primarily testing and benchmarking. I’d say about half of my colleagues have now migrated to doing all of that on Linux boxes simply because Apple has been dicky about GPU support. Especially those doing lots of ML. My corner of UC Berkeley is becoming quite a Google shop these days, so common tools like Word or Keynote have effectively been replaced by Google Docs anyway, and they run in the same Chrome browser on any platform. These people have less and less reason to stay with Macs. Personally, I really like Linux and spend a lot of time using Linux tools, but I would definitely prefer to do it all on my Mac rather than having to run several boxes. That’s probably niche. But of course so are those mixing rap music videos on $40,000 workstations that require $1000 wheel kits. ;)

I mentioned this once before: my cousin is an astrophysicist who has been working with NASA for decades and is also a professor at a major university. He loves Macs and Apple products as much as I do, and he’s always said how much they love Macs at NASA, and how the Curiosity Mars Rover was basically a G3 9600 Mac:

A radiologist friend of the family runs most of his practice on Macs and uses this software, which he says is popular around the world:

An optometrist neighbor uses this, and the company makes Mac software for other medical specialties:

A speech pathologist friend who is involved in research always talks about how important iPads and Macs are in the field.

Apple’s ResearchKit and CareKit for iOS and Macs are making headway in medical and healthcare studies, as well as in communication between providers and patients:

There are many scientific fields that depend on visualization, rapid rendering and communication, as well as other fields that do not use CUDA.

Honestly thought CUDA was an old switch/button on the Mac motherboard.

That, too!

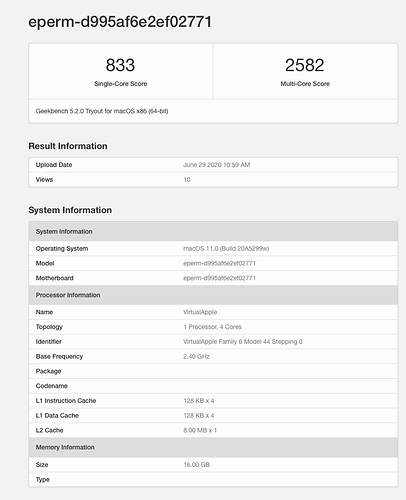

As could be expected, the first benchmarks of the DTK have come out. It appears Rosetta only exposes 4 ARM cores (the performance cores?). Geekbench reports average scores of 811 (single-core) and 2,871 (multi-core), and that’s emulated x86!

Here’s a list of updated benchmarks.

My iMac has nothing to fear…

I too hope the support for Intel-based Macs lasts at least five years. I just replaced my 2013 MacBook Pro with a new 2019 MacBook Pro. I would like to get at least five years out of my recent purchase.

I’ve talked with developers, and they are all saying that Intel-based software running on the A12Z chip under Rosetta 2 is almost as fast as on a new MacBook Air. You must consider this:

- This is Intel emulation on an ARM chip.

- This is beta software that has not been optimized.

- This chip is last year’s model and isn’t the current A13 chip that is about 20% faster. And, of course, Apple will soon introduce a new A14 chip that’s probably even 20% to 25% faster.

- This chip was built specifically for the iPad Pro and not a Mac. Apple will have a special Mac version of the A14 chip just like they have a more powerful A14 version for the iPad over the iPhone.

So, software running in emulation, on a non-optimized emulator, on a chip that was not designed for a Mac and is a generation behind the current chip, is almost as fast as running on actual Intel hardware. That’s really impressive. Imagine the speed when running software compiled for ARM on a chip that’s designed to be used in a desktop machine.
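To put rough numbers on the chip-generation points in the list above, here is a quick back-of-the-envelope sketch. It assumes the A14 figure is measured relative to the A13 and that the two gains simply multiply; both are assumptions on top of already speculative percentages, and the helper name `compound` is just for illustration.

```python
def compound(*gains: float) -> float:
    """Multiply successive fractional speedups into one overall factor."""
    factor = 1.0
    for gain in gains:
        factor *= 1.0 + gain
    return factor


# A12Z -> A13 (about 20%), then A13 -> A14 (assumed 20% to 25%).
low = compound(0.20, 0.20)
high = compound(0.20, 0.25)
print(f"{low:.2f}x to {high:.2f}x over the A12Z")  # prints "1.44x to 1.50x over the A12Z"
```

That is before counting any gain from a Mac-specific variant of the chip or from running native ARM code instead of translated Intel code.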

When I first heard about Apple running Macs on this chip, it didn’t sound right. I figured that Apple was more likely making a desktop-based iPad that would be less locked down than iPadOS.

When Apple went from the PowerPC to the Intel chip, the Mac platform had just been renewed with OS X. The PowerPC chip couldn’t keep up with Intel and was at a dead end. Motorola wasn’t going to make a new generation of the PowerPC chip. Plus, running on an Intel chip would allow Windows and Linux virtualization, something extremely important in this world. It was a matter of survival for Apple to move from the PowerPC to Intel.

It is very different today. macOS is now over two decades old. Apple has a second-generation platform in iOS/iPadOS running on ARM that is slowly moving into the desktop space. Intel’s x86 isn’t at a dead end. Intel is still working on future generations of their chip, and even without the Mac, 90% of all computers will still use it. And moving the Mac to ARM may mean that developers who have used a Mac because they like the hardware, but need Windows emulation on an x86 chip, will no longer be able to use new Macs. That’s a significant chunk of the Mac market.

There is only one reason for Apple to go through this trouble and tumult: Apple expects the new ARM Macs to blow every other desktop computer out of the water. Apple expects these new machines to be so much faster and so much more efficient, and the developer beta machine is barely hinting at what we can expect.