Hi guys!

Question for you.

How much cache space does your backup software burn on your local drive to keep track of what has been backed up?

I’m using Arq, and mine is >50GB right now. The cache can be found in:

/Library/Application Support/ArqAgent/cache.noindex

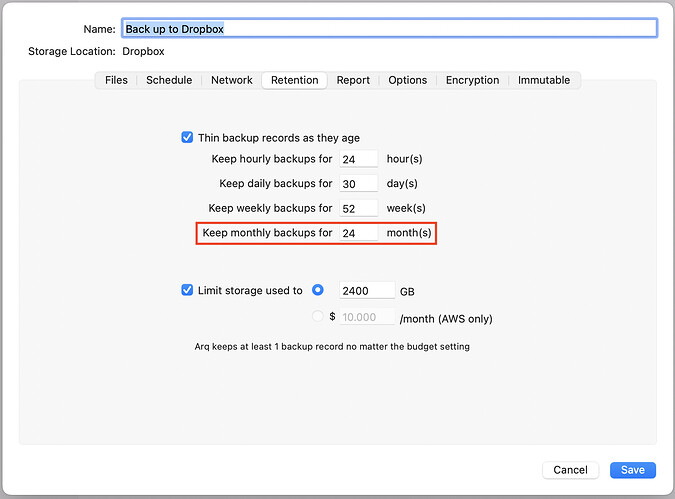

According to the developer, the size depends on the number of files and the number of backup records. A backup record appears to be logged each time a backup runs, and Arq retains historical files and thins aging backups over time.

He also confirmed that for bundles like a Photos Library, each enclosed file is treated individually. That’s preferable for incremental backup efficiency, but it clearly makes this cache much larger, especially for someone like me with 115,000 photos and videos in the library.

So I did some math to see if 50GB was a reasonable size, counting the files in the two directories I back up:

sh-3.2# pwd

/Users

sh-3.2# find . | wc -l

1278154 (x44 backup records)

sh-3.2# pwd

/Volumes/Photos Image 2

sh-3.2# find . | wc -l

402696 (x41 backup records)

Multiplying each file count by its number of backup records and adding the two:

(1278154 x 44) + (402696 x 41) = 72749312

Dividing 50GB by this gives me about 690 bytes per entry. I don’t know what goes in there, but that seems a little bloated for mapping an inode, a backup record ID, and possibly a date/time. But I’ll grant there’s plenty I don’t know here.
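
If anyone wants to check my arithmetic, here is a rough sketch of the same calculation in the shell. The variable names are just mine, and I'm assuming 50GB means decimal gigabytes (50 x 10^9 bytes); with binary gigabytes the result comes out a bit higher, around 737.

```shell
# Figures copied from the post above.
# Assumption: 50GB is decimal, i.e. 50 * 10^9 bytes.
users_files=1278154
users_records=44
photos_files=402696
photos_records=41
cache_bytes=50000000000

# One cache entry per file per backup record (my guess at how it scales).
entries=$(( users_files * users_records + photos_files * photos_records ))

# Integer division, so the result is rounded down.
per_entry=$(( cache_bytes / entries ))

echo "entries: $entries"
echo "bytes per entry: $per_entry"
```

That prints 72749312 entries and 687 bytes per entry, which is where my "about 690" comes from.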

But I’m curious: what size cache are you guys harboring? The much more challenging question is whether you can normalize that size by the number of files you have (and the number of backup records, if your software works the same way). My little shell commands could help you arrive at the former, if you feel adventurous!
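
For the adventurous, here is a minimal sketch that rolls the measurement into one function. The name `cache_per_entry` is made up for illustration, and the "files x backup records" model is only my assumption about how the cache scales. You supply the cache directory, the number of backup records (which you would need to look up in your backup software), and the directory being backed up.

```shell
# Hypothetical helper, not part of Arq or any backup tool.
# usage: cache_per_entry CACHE_DIR BACKUP_RECORDS BACKED_UP_DIR
cache_per_entry() {
    # du -sk reports the total size in 1024-byte blocks.
    cache_kb=$(du -sk "$1" | awk '{print $1}')

    # Same count as in my post: find lists directories too, not just files.
    files=$(find "$3" | wc -l | tr -d ' ')

    # Assumed model: one cache entry per file per backup record.
    entries=$(( files * $2 ))

    echo "$(( cache_kb * 1024 / entries )) bytes per entry"
}
```

In my case that would be something like `cache_per_entry "/Library/Application Support/ArqAgent/cache.noindex" 44 /Users`, probably run as root so that find and du can read everything. With more than one backed-up directory you would need to sum the entries yourself, as I did above.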