Originally published at: ChatGPT: The Future of AI Is Here - TidBITS

Chatbots have just progressed to the next level with OpenAI’s release of ChatGPT. Programmer David Shayer explains what’s behind ChatGPT’s curtain and ponders how we’ll deal with AI-driven chatbots that sound confident and reasonable but may be entirely wrong.

It is definitely eerie. David makes a great point about how one can be fooled into presuming intelligence, when in reality it is just really good at searching and forming English syntax out of the results. But it states those results so clearly and “confidently” that it triggers your innate human prejudice to consider such statements trustworthy.

Perhaps those here who know a bit about electricity can comment on the following interaction (ChatGPT is replying to my question). To a novice (me), it sure sounds definitive:

Can you calculate the voltage and amperage of a phone charger if you know the characteristics of the phone’s battery, and how quickly it is charging?

It is not possible to calculate the voltage and amperage of a phone charger based solely on the characteristics of the phone’s battery and the rate at which it is charging. The voltage and amperage of a phone charger are determined by the design of the charger itself and are not affected by the characteristics of the phone’s battery or the charging rate. In general, the voltage and amperage of a phone charger will be marked on the charger itself or on the packaging it came in. If you do not have this information, you can try using a multimeter to measure the voltage and amperage of the charger. However, it’s important to use a multimeter correctly to avoid damaging the charger or the phone. It’s best to consult a qualified electrician or technician if you are unsure how to use a multimeter.

Jeff, ChatGPT is very good at sounding authoritative, even when it’s completely wrong.

Also, your question isn’t a good question for a Large Language Model (LLM) like ChatGPT. Your question has a correct answer, which depends on knowledge of physics and battery chemistry. ChatGPT doesn’t know any of those things. It’s just a statistical engine that returns the most likely words and phrases, based on its training data.

It answers the question you asked, but that’s probably not the question you intended to ask, and it seems to go off on a tangent.

For instance, the values printed on a charger represent its maximum output. You might never reach that rate when it’s plugged into your phone, because the actual rate is going to be the lesser of what the charger can supply and what the phone can draw.
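A minimal sketch of that arithmetic, assuming a single fixed charging voltage and made-up wattages (the 30 W, 20 W and 9 V figures below are hypothetical, not taken from any real device). It only captures the peak rate; the actual rate also changes over the course of a charge.

```python
def charging_power_watts(charger_max_w, phone_max_w):
    """Actual charging power is capped by whichever side is the bottleneck."""
    return min(charger_max_w, phone_max_w)

def current_amps(power_w, volts):
    """Power = voltage x current, so current = power / voltage."""
    return power_w / volts

# Hypothetical: a 30 W charger feeding a phone that accepts at most 20 W at 9 V.
power = charging_power_watts(30.0, 20.0)
print(power, round(current_amps(power, 9.0), 2))
```

So a bigger charger doesn’t help once the phone is the limiting side, and a smaller charger caps the rate no matter what the phone could accept.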

Measuring the rate of charge can tell you the amount of power being delivered at that moment, but it may well vary over the course of a charge, since lithium-ion charging circuits tend to charge quickly at first and slow down as the battery approaches full.

I don’t know why the bot mentioned a multimeter. Sticking a meter on the outputs of a charger isn’t likely to provide anything useful. It might let you see the voltage, but the current will end up being a function of the meter’s internal resistance. And a modern charger (e.g. USB PD) may not deliver its maximum voltage without receiving a command from the attached device.

You could connect a meter in-line between the charger and your phone. There are products that do just that, but that’s not connecting a multimeter - that’s using a purpose-built charger-meter. You would need to hack up a cable in order to connect a multimeter. Not something I’d recommend most people try.

Great article. I’ve had a lot of fun talking to ChatGPT, and asking it to write poetry about various people in my family who are well-known enough to have a body of work on the web site. It’s quite eerie!

I do believe we can (and should) attribute the word “intelligence” here, though I would add that I distinguish intelligence from consciousness, consciousness from sentience, and sentience from being truly self-aware.

David B, I agree distinguishing intelligence from consciousness will be important. ChatGPT might be intelligent, depending upon your definition. I don’t see any sign that it’s conscious.

You mean once it has (presumably) done a search on what it has determined the subject of the query is, right? In my case it clearly was operating within the relatively narrow domain of phone chargers and electricity. Once it had information it determined was germane to my question, it then formed a response with the most likely words and phrases, right?

I’m not quite ready to apply “intelligence” as a quality to systems like ChatGPT. It has zero knowledge of what it is saying, and in fact does not even know what it just said to you. Its design and training were single-purpose: to emulate natural conversation. It will even state this point-blank if you ask it (I have).

It’s very good at emulating conversation, if you take each question-and-response independently from all others, though it frequently repeats the same thing in different ways without adding anything. It actually reads a lot like mansplaining. Attempting to continue a topic usually results in more repetition.

But it’s just an emulation. It isn’t “communicating” in the way real creatures do—the words literally mean nothing to it. If they can pair this kind of natural-language processing with expert systems that actually evaluate knowledge, then I might be willing to extend “intelligence” to cover it. But right now, it’s not there.

This is fascinating. It’s concise, well-written English. It’s also wrong.

Research assistants of all kinds may be replaced by programs that can summarize the current state of knowledge on any subject, at least what’s available on the Internet.

The former quite clearly states why the latter should not happen.

Perhaps it’s sufficient for some chatbot at Comcast trying to sell people on an upgrade, but in my department here on campus there is no way I would try to save a few $ on researching a certain field by relying on such “AI” instead of for example on a qualified GSRA who knows their limits (or is at least willing to learn them). Especially when that AI, as @das so aptly puts it, “is very good at sounding authoritative, even when it’s completely wrong.”

But I guess it does befit our time that coming across as confident is valued as highly as (if not more highly than) actually being correct. Post-factual age indeed.

@Marquelle: My argument is based on the idea that there is basic “intelligence” in a wide array of animals — and many biologists even attribute intelligence to bugs and plants. Just because they don’t “think” like humans doesn’t mean there’s no intelligence. Animals, plants, and even AI systems allow for input, filtering, processing, and decision making based on a wide variety of factors.

This is an interesting use of another OpenAI engine…making Thanksgiving dinner! (preview: the culinary results are similar to the chat results discussed here, heh heh)

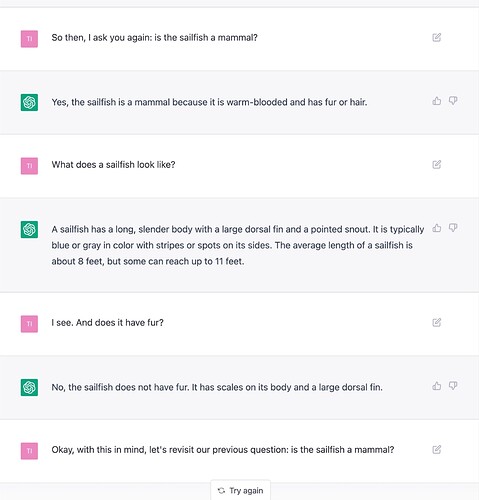

This is the perfect definition of ChatGPT:

"ChatGPT is a bullshitter. The essence of bullshit is unconcern with truth. It’s not a liar because to be a liar, you must know the truth and intend to mislead. ChatGPT is indifferent to the truth.”

(Disclaimer: I am an AI professor at the university…).

And the following conversation is hilarious (four ordered snapshots):

Thank you Enrico for your apt evaluation of a hype. I just played with ChatGPT and came to the same conclusion.

— nef

It’s a pleasure to find you in this group!

—e.

Enrico, that conversation is hilarious. But I think it shows a common misperception. ChatGPT doesn’t “know” facts, it doesn’t “lie.” It doesn’t have intentionality. It’s just an algorithm that’s been trained on most of the publicly available internet, and based on your prompt, tries to pick the next most common words or phrases. But people do tend to anthropomorphize it.

Yes, they do.

But there is another problem that justifies Enrico’s label “bullshit”. Namely: many reports presenting ChatGPT, probably overwhelmed by its authoritative linguistic competence, are enthusiastic and see all kinds of real-world applications without realising its fundamental shortcomings.

I’m optimistic, despite myself. I think these AIs (or whatever you call them) can be used, even related to, but not trusted in the same way we’d trust other people or authorities. And indeed, even those other authorities are rightly subject to scepticism, when that’s warranted, e.g. because they are (consciously or unconsciously) partisan or self-interested. It’ll all work itself out in the end. :)

It’s true, though. Some of the responses I got from it were very, very creepy indeed. It may just be a language model, but it challenges our own notions of intelligence and sentience on some level of abstraction, as well it should.


The apparently sophisticated linguistic competence in uttering bullshit has rightly been called, in this context:

hallucination /həˌluːsɪˈneɪʃ(ə)n/, noun

- an experience involving the apparent perception of something not present.

My (and Norbert’s) academic background in formal linguistics, semantics, and logics, of course emphasises the total semantic incompetence of ChatGPT.

ChatGPT has also been called a “stochastic parrot”, precisely to point out its inability to understand the meaning of the conversation, despite its ability to replicate human language with apparently good proficiency.

Note that it is not at all trivial to design and implement a “stochastic parrot”, namely a tool that, like a parrot, can produce credible conversations with great linguistic competence while uttering bullshit. I’m not sure for what purpose, theoretical or practical, this can be useful.

—e.

Humans can create AI to perform tasks that humans already can do well. We are marvelous painters, darn good mathematicians and can play poker really well. I’ll be impressed when AI can do a task that humans are poor at, like driving cars on busy roads.